Researchers at Google have found that artificial intelligence, if properly trained, can do wonders: it can create its own "inhuman" cryptographic schemes, and it turns out to be better at encrypting than at decrypting. Because the AI builds the encryption scheme itself, it could be hard for hackers to crack.

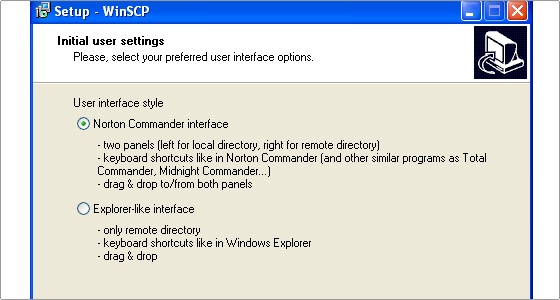

To find out whether artificial intelligence can learn to encrypt on its own, AI researchers at Google Brain turned to deep learning. They built a game with three entities, named Alice, Bob and Eve, each powered by a deep neural network. Alice and Bob shared a secret key; Eve did not. Eve had some early luck decrypting their messages, but as the methods evolved over time Alice and Bob became more proficient, and she lost the ability to crack the cipher.
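The setup of the game can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' code: the real players are neural networks that learn their behavior during training, whereas here `alice`, `bob` and `eve` are fixed, hand-written stand-ins (a simple XOR with the shared key), and `N_BITS`, `bit_errors` and `average_errors` are hypothetical names chosen for this example. It shows only how a round is scored: Bob and Eve each try to recover the plaintext, and each is judged by how many bits they get wrong.

```python
import random

N_BITS = 16  # hypothetical message and key length, chosen for illustration


def random_bits(rng, n):
    """Return a list of n random bits."""
    return [rng.randint(0, 1) for _ in range(n)]


def alice(plaintext, key):
    # Stand-in for Alice's network (assumption): XOR with the shared key.
    return [p ^ k for p, k in zip(plaintext, key)]


def bob(ciphertext, key):
    # Stand-in for Bob's network (assumption): XOR again with the same key.
    return [c ^ k for c, k in zip(ciphertext, key)]


def eve(ciphertext):
    # Eve sees only the ciphertext, never the key.
    # Stand-in guess (assumption): treat the ciphertext as the plaintext.
    return list(ciphertext)


def bit_errors(guess, truth):
    """Count the positions where the guess differs from the true plaintext."""
    return sum(g != t for g, t in zip(guess, truth))


def play_round(rng):
    """Play one round and return (Bob's bit errors, Eve's bit errors)."""
    plaintext = random_bits(rng, N_BITS)
    key = random_bits(rng, N_BITS)
    ciphertext = alice(plaintext, key)
    bob_err = bit_errors(bob(ciphertext, key), plaintext)
    eve_err = bit_errors(eve(ciphertext), plaintext)
    return bob_err, eve_err


def average_errors(rounds=2000, seed=0):
    """Average the bit errors of Bob and Eve over many rounds."""
    rng = random.Random(seed)
    bob_total = 0
    eve_total = 0
    for _ in range(rounds):
        bob_err, eve_err = play_round(rng)
        bob_total += bob_err
        eve_total += eve_err
    return bob_total / rounds, eve_total / rounds


if __name__ == "__main__":
    bob_avg, eve_avg = average_errors()
    print(f"Bob: {bob_avg:.2f} wrong bits per message, Eve: {eve_avg:.2f}")
```

With these stand-ins Bob always recovers the message exactly, while Eve's average error sits close to `N_BITS / 2`, which is no better than guessing each bit by coin flip. That is the end state the experiment describes; in the actual game the networks had to learn their way there.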

At first the AIs were not good at sending secret messages, but after 15,000 iterations Alice and Bob had devised a solid encryption protocol, and Eve had a hell of a time decrypting their communication. Many of their techniques were odd and unexpected, relying on calculations rarely seen in “human-generated” encryption. The upshot is that robots may one day communicate with each other in ways that neither other robots nor anyone else can crack.

This was just a simple test by the Google Brain researchers of whether AI can successfully generate its own encryption. Human-designed encryption remains well beyond what the AI systems in this experiment achieved. However, in an era when security on the internet is built on cryptography, breaking encryption could become the next front in the security arms race.